Sl no.

|

Particulars

|

|

|

1

|

Please specify the type of application you wish to performance test.

Eg: Web,

|

Type of mobile app

Web/Native/Hybrid

|

2

|

Which mobile operating systems are supported? Please mention the supported version of each OS.

E.g. Android / iOS / BlackBerry / Windows

|

3

|

In which technology/platform is the application developed?

E.g. Java, HTML5, J2EE, .Net, PHP

|

4

|

Specify the communication protocol between the client and the first server.

E.g.:

o HTTPS

o Web Service

o Citrix

o Mobile Application – HTTP(s)

o Others – Please specify

|

5

|

Type of Web application (Browser based / Client Server / Others)

|

o If others, please specify:

|

o Application accessibility: (Intranet / Internet / Both)

|

6

|

Application security feature: (Encryption/Signature/OTP/TFA/Others)

o If others, please specify

|

o Is the application end-to-end encrypted? (Is client-to-server traffic sent in encrypted format or in plain text?)

|

o Can the security feature be disabled during the performance testing activity?

|

7

|

Can the same user log in to the application from multiple mobile devices?

|

8

|

Will a separate test environment be provided for the performance test run?

|

9

|

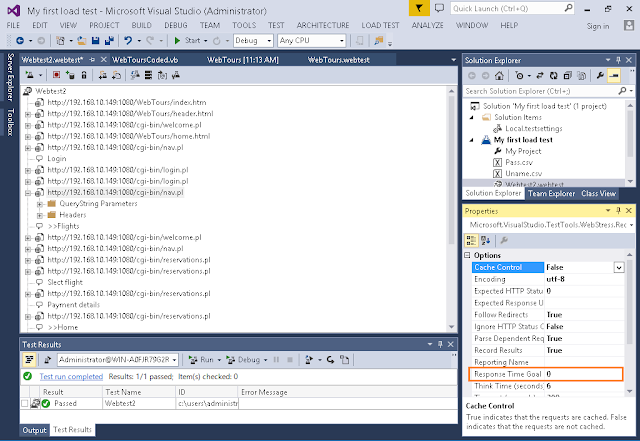

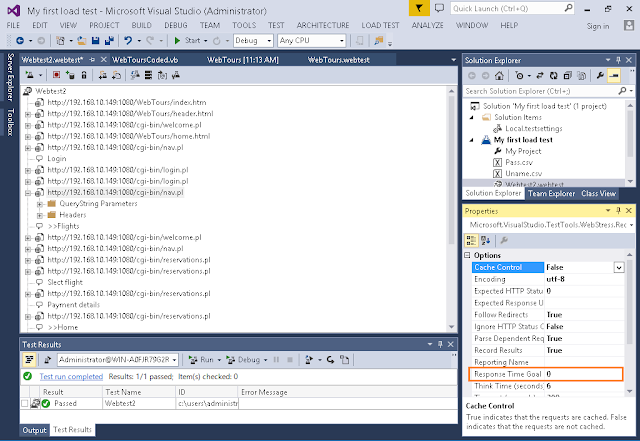

Please specify any preferred performance testing tools.

E.g. LoadRunner, Visual Studio, JMeter

|

10

|

Do you allow the use of open-source tools, if proven compatible with the application?

E.g. JMeter

|

11

|

Performance monitoring: During execution, can your Dev/IT team

continuously monitor the application servers?

|

12

|

What is the current project lead time for performance testing activities?

I.e. start and completion dates

|

13

|

Is functional testing complete? Have all functional testing defects been fixed?

|

14

|

Are there any known issues in this application?

E.g.

o Memory lock

o Unexpected growth in daily visitors

o High response times that lead to timeout errors

|

15

|

Can the load injector machine(s) be placed in the same subnet as the 1st tier of the application? (Yes / No)

o If No, please suggest an alternative to obtain a minimum of 100 Mbps connectivity between the load injector and the 1st tier:

o If Yes, please specify whether the load injector machine will be available 24x7 or only for a particular time period in the day:

|

|

|

16

|

Which database is used?

E.g. Oracle, MySQL, SQL Server

|

17

|

Which application server does the system run on?

E.g. Tomcat, IIS, WebSphere

|

18

|

What does the target application look like? (Please specify all server and network appliance configurations and their interaction mechanisms)

o LAN/WAN details

o Terminal servers

o Bandwidth link

o Load Balancing techniques

o Batch Transactions

o Disaster recovery

|

19

|

Please specify the application architecture: (2 Tier / 3 Tier / More than 3 Tier)

|

o Please share the network architecture. This is required to understand the application flow. If that is not possible (due to company policy), please share a rough diagram of the application flow

|

o Types of servers involved (please give details with versions): (e.g. Web -> IIS 6.0; App -> WebSphere Application Server 6.1; Database -> Oracle 10g)

|

20

|

During test execution, will any type of firewall be involved between any tiers (including between the client machine and the 1st tier)? (Yes / No)

|

21

|

Please specify the availability of the following teams during performance testing, in case any assistance is required:

o Application (including DB for creating Test data etc.)

o Network

o Any third-party support (if involved)

|

22

|

Is this application integrated with any third-party software? If yes,

will this be isolated during performance testing?

|

23

|

Arrangements for the performance testing environment and its scale compared to the production environment (e.g. 1/2 of Prod, 2/3 of Prod, or 1:1 if feasible).

|

|

|

24

|

Please specify the type of performance testing you wish to conduct.

o Load Test – Normal and Peak load

o Stress Test

|

25

|

Please specify if anything other than load and stress is required.

o Soak/Endurance

o Spike

|

26

|

What is the expected peak/concurrent user load for testing?

|

27

|

Business process (scenario):

Number of critical business processes to be considered for performance testing.

(The number of processes should be identified using the 80-20 rule, i.e. 80% of the load should be simulated using the 20% of scenarios that are most critical.)

|

28

|

List the scenarios to be considered for performance testing.

|

29

|

Is any file upload activity involved? (Yes / No)

|

30

|

If the selected scenarios require unique inputs, please specify them.

E.g. Credit Card, SSN etc.

|

31

|

What are the goals/SLA of the performance testing activity?

o Response Time (E.g. search should not take more than 3 seconds)

|

o User load (E.g. application should be able to handle 500 concurrent

users)

|

o Transaction Rate (E.g. application must be able to handle 50

transactions per second)

|

o Hardware Resource Utilization (E.g. CPU utilization on application

server should not exceed 70%)

|

o Pass percentage of scenarios

|

32

|

What is the expected throughput of the application?

E.g. 1000 financial transactions per minute

|

33

|

User Roles involved:

o For the key scenarios identified for testing, are any user roles required (Maker / Checker)?

o If user roles are involved, is the landing screen different for each role?

|

34

|

Is it required to generate load from multiple geographical regions? If

yes, which?

|

35

|

If required, is it possible to create dummy data for testing directly from the back end?

(Yes / No)

|

36

|

If required, can the application be made available or accessed from

Testhouse for conducting a feasibility study or for a POC?

|

37

|

What is the preferred engagement model?

Onsite/Offshore/Hybrid (Onsite-Offshore)

|

38

|

Are there any time constraints for running the test?

E.g. the server can only be accessed outside business hours; the server can only be accessed from 7 pm to 8 am

|
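
Item 27 asks for the number of critical business processes, chosen using the 80-20 rule. The sketch below shows one possible way to shortlist them, assuming each candidate scenario can be given an estimated share of production load as a whole-number percentage. The `Scenario` class, the `select_critical_scenarios` function, and the sample figures are hypothetical and only illustrate the idea.

```python
from dataclasses import dataclass


@dataclass
class Scenario:
    name: str
    load_share_pct: int  # estimated share of total production load, in percent (assumed input)


def select_critical_scenarios(scenarios, target_pct=80):
    """Pick the heaviest scenarios until their combined share reaches target_pct."""
    selected = []
    covered = 0
    # Walk the scenarios from the largest load share to the smallest.
    for scenario in sorted(scenarios, key=lambda s: s.load_share_pct, reverse=True):
        if covered >= target_pct:
            break
        selected.append(scenario)
        covered += scenario.load_share_pct
    return selected, covered


if __name__ == "__main__":
    # Illustrative figures only; real shares would come from production logs or analytics.
    candidates = [
        Scenario("profile update", 8),
        Scenario("login", 45),
        Scenario("reports", 6),
        Scenario("search", 25),
        Scenario("admin", 4),
        Scenario("checkout", 12),
    ]
    chosen, covered = select_critical_scenarios(candidates)
    for scenario in chosen:
        print(f"{scenario.name}: {scenario.load_share_pct}%")
    print(f"Combined load covered: {covered}%")
```

The shortlist will often be more or fewer than exactly 20% of the scenarios; the rule is a guideline for focusing scripting effort on the processes that generate most of the load.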